
COMPILER INTRINSICS AND FUNCTIONAL EQUIVALENTS

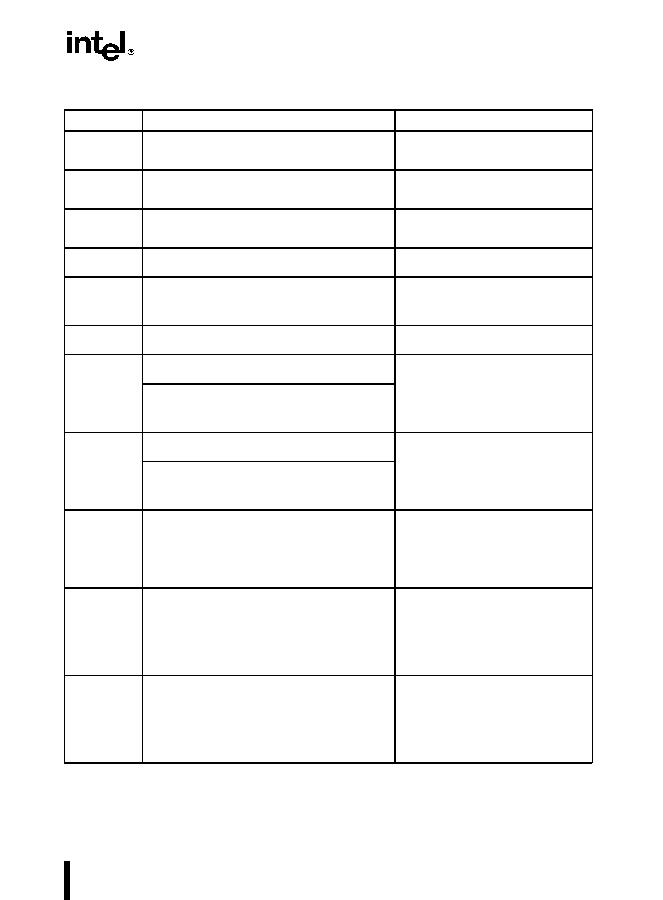

PMULHUW

__m64 _m_pmulhuw(__m64 a, __m64 b)

__m64 _mm_mulhi_pu16(__m64 a, __m64 b)

Multiplies the unsigned words in a and b, returning the upper 16 bits of the 32-bit intermediate results.
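A minimal sketch of PMULHUW next to its scalar functional equivalent, assuming GCC or Clang targeting x86-64 (where MMX and SSE are enabled by default). The helper names `mulhi_u16x4` and `mulhi_u16_ref` are hypothetical, and the `memcpy` loads rely on x86 being little-endian, so array element 0 lands in word 0.

```c
#include <stdint.h>
#include <string.h>
#include <xmmintrin.h> /* PMULHUW is an SSE extension to MMX */

/* Hypothetical helper: high 16 bits of four unsigned 16x16 -> 32-bit products. */
static void mulhi_u16x4(const uint16_t a[4], const uint16_t b[4], uint16_t out[4])
{
    __m64 va, vb, vr;
    memcpy(&va, a, sizeof va); /* word 0 = a[0] on little-endian x86 */
    memcpy(&vb, b, sizeof vb);
    vr = _mm_mulhi_pu16(va, vb);
    memcpy(out, &vr, sizeof vr);
    _mm_empty();               /* EMMS: release MMX state before any x87 code */
}

/* Scalar functional equivalent for one word. */
static uint16_t mulhi_u16_ref(uint16_t a, uint16_t b)
{
    return (uint16_t)(((uint32_t)a * b) >> 16);
}
```

For example, 0xFFFF times 0xFFFF is 0xFFFE0001, so that word of the result is 0xFFFE.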

PMULHW

__m64 _m_pmulhw(__m64 m1, __m64 m2)

__m64 _mm_mulhi_pi16(__m64 m1, __m64 m2)

Multiply four signed 16-bit values in m1 by four signed 16-bit values in m2 and produce the high 16 bits of the four results.
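The signed variant can be sketched the same way, again assuming GCC or Clang on x86-64. The helper names are hypothetical; the scalar reference relies on `>>` of a negative `int32_t` being an arithmetic shift, which C leaves implementation-defined but GCC and Clang guarantee.

```c
#include <stdint.h>
#include <string.h>
#include <mmintrin.h>

/* Hypothetical helper: high 16 bits of four signed 16x16 -> 32-bit products. */
static void mulhi_i16x4(const int16_t a[4], const int16_t b[4], int16_t out[4])
{
    __m64 va, vb, vr;
    memcpy(&va, a, sizeof va);
    memcpy(&vb, b, sizeof vb);
    vr = _mm_mulhi_pi16(va, vb);
    memcpy(out, &vr, sizeof vr);
    _mm_empty(); /* EMMS before returning to possible x87 code */
}

/* Scalar functional equivalent for one word (arithmetic >> assumed). */
static int16_t mulhi_i16_ref(int16_t a, int16_t b)
{
    return (int16_t)(((int32_t)a * b) >> 16);
}
```

Because the high half keeps the sign, a small negative product such as -2 * 3 = -6 yields -1 in that word rather than 0.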

PMULLW

__m64 _m_pmullw(__m64 m1, __m64 m2)

__m64 _mm_mullo_pi16(__m64 m1, __m64 m2)

Multiply four 16-bit values in m1 by four 16-bit values in m2 and produce the low 16 bits of the four results.
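PMULLW and PMULHW each return half of a product, so a common pattern is to interleave the two halves to recover the full signed 32-bit products. The sketch below assumes GCC or Clang on x86-64; `mul_i16x4_full` is a hypothetical name, and `_mm_unpacklo_pi16` / `_mm_unpackhi_pi16` (PUNPCKLWD / PUNPCKHWD) are standard MMX intrinsics not listed in this table.

```c
#include <stdint.h>
#include <string.h>
#include <mmintrin.h>

/* Hypothetical helper: full 32-bit signed products of four int16 pairs. */
static void mul_i16x4_full(const int16_t a[4], const int16_t b[4], int32_t out[4])
{
    __m64 va, vb, lo, hi, p01, p23;
    memcpy(&va, a, sizeof va);
    memcpy(&vb, b, sizeof vb);
    lo  = _mm_mullo_pi16(va, vb);     /* low 16 bits of each product */
    hi  = _mm_mulhi_pi16(va, vb);     /* high 16 bits of each product */
    p01 = _mm_unpacklo_pi16(lo, hi);  /* words lo0,hi0,lo1,hi1 = products 0 and 1 */
    p23 = _mm_unpackhi_pi16(lo, hi);  /* words lo2,hi2,lo3,hi3 = products 2 and 3 */
    memcpy(&out[0], &p01, sizeof p01);
    memcpy(&out[2], &p23, sizeof p23);
    _mm_empty();
}
```

For 1000 * 1000 = 1000000 (0x000F4240), PMULLW alone yields 0x4240 and PMULHW yields 0x000F; interleaving them restores 0x000F4240.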

POR

__m64 _m_por(__m64 m1, __m64 m2)

__m64 _mm_or_si64(__m64 m1, __m64 m2)

Perform a bitwise OR of the 64-bit value in m1 with the 64-bit value in m2.
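The functional equivalent of POR is the scalar `|` on a 64-bit integer. A minimal sketch, assuming GCC or Clang on x86-64; `or64` is a hypothetical name.

```c
#include <stdint.h>
#include <string.h>
#include <mmintrin.h>

/* Hypothetical helper: OR two 64-bit values through an MMX register. */
static uint64_t or64(uint64_t a, uint64_t b)
{
    __m64 va, vb, vr;
    uint64_t r;
    memcpy(&va, &a, sizeof va);
    memcpy(&vb, &b, sizeof vb);
    vr = _mm_or_si64(va, vb);
    memcpy(&r, &vr, sizeof r);
    _mm_empty();
    return r; /* same value as the scalar expression a | b */
}
```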

PREFETCH

void _mm_prefetch(char *a, int sel)

Loads one cache line of data from address a to a location "closer" to the processor. The value sel specifies the type of prefetch operation (_MM_HINT_T0, _MM_HINT_T1, _MM_HINT_T2, or _MM_HINT_NTA) and must be a constant.
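A sketch of software prefetching in a sequential loop, assuming GCC or Clang on x86-64. The 64-byte line size and the four-line prefetch distance are hypothetical tuning values, not figures from this table; the right distance depends on the machine and should be measured. Only one prefetch is issued per cache line, since repeated prefetches of the same line only add instruction overhead.

```c
#include <stddef.h>
#include <xmmintrin.h> /* _mm_prefetch and the _MM_HINT_* constants */

/* Hypothetical tuning values: 64-byte lines, prefetch four lines ahead. */
#define LINE_INTS (64 / sizeof(int))
#define PREFETCH_LINES 4

static long long sum_with_prefetch(const int *data, size_t n)
{
    long long sum = 0;
    for (size_t i = 0; i < n; i++) {
        size_t ahead = i + PREFETCH_LINES * LINE_INTS;
        /* Issue one prefetch per cache line, a few lines ahead of use. */
        if (i % LINE_INTS == 0 && ahead < n)
            _mm_prefetch((const char *)&data[ahead], _MM_HINT_T0);
        sum += data[i];
    }
    return sum;
}
```

The prefetch is only a hint: the result is the same with or without it, and only the timing may differ.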

PSADBW

__m64 _m_psadbw(__m64 a, __m64 b)

__m64 _mm_sad_pu8(__m64 a, __m64 b)

Computes the sum of the absolute differences of the eight unsigned bytes in a and b, returning the value in the lower word. The upper three words are cleared.

PSHUFW

__m64 _m_pshufw(__m64 a, int n)

__m64 _mm_shuffle_pi16(__m64 a, int n)

Returns a combination of the four words of a: word i of the result is the word of a selected by bits 2i+1:2i of n. The selector n must be an immediate.

PSLLW

__m64 _m_psllw(__m64 m, __m64 count)

__m64 _mm_sll_pi16(__m64 m, __m64 count)

Shift four 16-bit values in m left by the amount specified by count while shifting in zeroes.

__m64 _m_psllwi(__m64 m, int count)

__m64 _mm_slli_pi16(__m64 m, int count)

Shift four 16-bit values in m left by the amount specified by count while shifting in zeroes. For the best performance, count should be a constant.

PSLLD

__m64 _m_pslld(__m64 m, __m64 count)

__m64 _mm_sll_pi32(__m64 m, __m64 count)

Shift two 32-bit values in m left by the amount specified by count while shifting in zeroes.

__m64 _m_pslldi(__m64 m, int count)

__m64 _mm_slli_pi32(__m64 m, int count)

Shift two 32-bit values in m left by the amount specified by count while shifting in zeroes. For the best performance, count should be a constant.
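The PSADBW, PSHUFW, and PSLLW intrinsics can be sketched together, assuming GCC or Clang on x86-64. All helper names are hypothetical. `_MM_SHUFFLE` from `<xmmintrin.h>` builds the PSHUFW selector; `_MM_SHUFFLE(0, 1, 2, 3)` picks words 3, 2, 1, 0 for result words 0 to 3, which reverses the vector.

```c
#include <stdint.h>
#include <string.h>
#include <xmmintrin.h> /* PSADBW and PSHUFW are SSE extensions to MMX */

/* Hypothetical helper: sum of |a[i] - b[i]| over eight unsigned bytes. */
static unsigned sad_u8x8(const uint8_t a[8], const uint8_t b[8])
{
    __m64 va, vb;
    unsigned r;
    memcpy(&va, a, sizeof va);
    memcpy(&vb, b, sizeof vb);
    /* The sum sits in the low word; the upper three words are zero. */
    r = (unsigned)_mm_cvtsi64_si32(_mm_sad_pu8(va, vb)) & 0xFFFFu;
    _mm_empty();
    return r;
}

/* Hypothetical helper: reverse the order of four words with PSHUFW. */
static void reverse_u16x4(const uint16_t in[4], uint16_t out[4])
{
    __m64 v;
    memcpy(&v, in, sizeof v);
    v = _mm_shuffle_pi16(v, _MM_SHUFFLE(0, 1, 2, 3)); /* selector is a constant */
    memcpy(out, &v, sizeof v);
    _mm_empty();
}

/* Hypothetical helper: shift each word left by 4; bits past bit 15 are lost. */
static void shl4_u16x4(const uint16_t in[4], uint16_t out[4])
{
    __m64 v;
    memcpy(&v, in, sizeof v);
    v = _mm_slli_pi16(v, 4); /* constant count, as recommended above */
    memcpy(out, &v, sizeof v);
    _mm_empty();
}
```

Note that PSLLW shifts each word independently: 0xF001 becomes 0x0010, not 0xF0010, because nothing carries into the neighboring word.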

PSLLQ

__m64 _m_psllq(__m64 m, __m64 count)

__m64 _mm_sll_si64(__m64 m, __m64 count)

Shift the 64-bit value in m left by the amount specified by count while shifting in zeroes.

__m64 _m_psllqi(__m64 m, int count)

__m64 _mm_slli_si64(__m64 m, int count)

Shift the 64-bit value in m left by the amount specified by count while shifting in zeroes. For the best performance, count should be a constant.
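Unlike PSLLW and PSLLD, PSLLQ treats the register as a single 64-bit value, so bits cross the word and doubleword boundaries. A sketch of both forms, assuming GCC or Clang on x86-64; the helper names are hypothetical, and `_mm_cvtsi32_si64` is used to place a run-time count in an `__m64`.

```c
#include <stdint.h>
#include <string.h>
#include <mmintrin.h>

/* Hypothetical helper: immediate form, fixed shift of 8 bits. */
static uint64_t shl64_imm8(uint64_t x)
{
    __m64 v;
    uint64_t r;
    memcpy(&v, &x, sizeof v);
    v = _mm_slli_si64(v, 8);
    memcpy(&r, &v, sizeof r);
    _mm_empty();
    return r;
}

/* Hypothetical helper: register form, count supplied in an __m64. */
static uint64_t shl64_var(uint64_t x, int count)
{
    __m64 v;
    uint64_t r;
    memcpy(&v, &x, sizeof v);
    v = _mm_sll_si64(v, _mm_cvtsi32_si64(count));
    memcpy(&r, &v, sizeof r);
    _mm_empty();
    return r;
}
```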

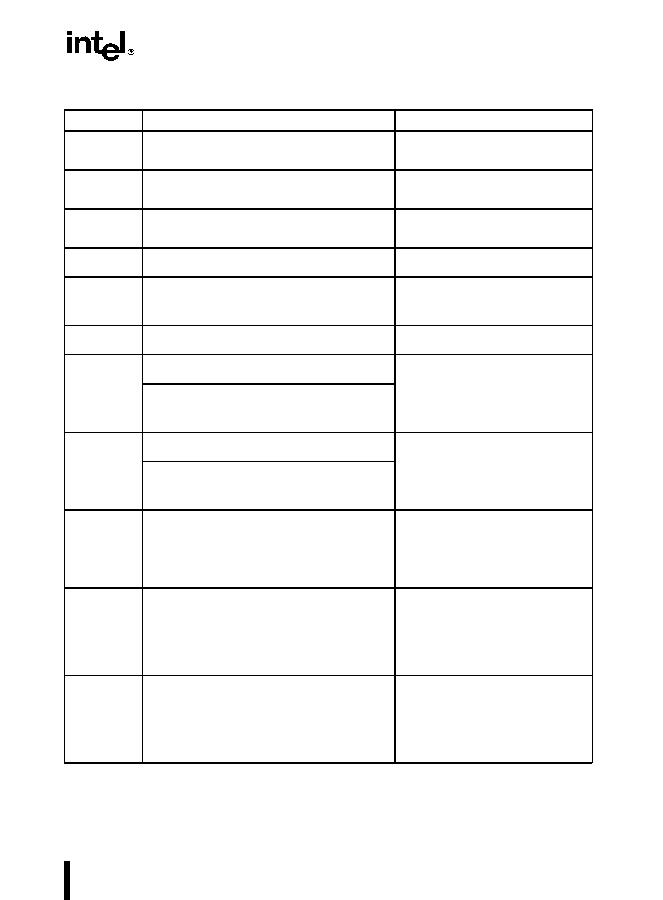

PSRAW

__m64 _m_psraw(__m64 m, __m64 count)

__m64 _mm_sra_pi16(__m64 m, __m64 count)

Shift four 16-bit values in m right by the amount specified by count while shifting in the sign bit.

__m64 _m_psrawi(__m64 m, int count)

__m64 _mm_srai_pi16(__m64 m, int count)

Shift four 16-bit values in m right by the amount specified by count while shifting in the sign bit. For the best performance, count should be a constant.
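Because PSRAW copies the sign bit into the vacated positions, negative words stay negative. A sketch of the immediate and register forms, assuming GCC or Clang on x86-64; the helper names are hypothetical.

```c
#include <stdint.h>
#include <string.h>
#include <mmintrin.h>

/* Hypothetical helper: arithmetic shift right by a constant 2. */
static void sar_i16x4_imm2(const int16_t in[4], int16_t out[4])
{
    __m64 v;
    memcpy(&v, in, sizeof v);
    v = _mm_srai_pi16(v, 2); /* sign bit shifted in from the left */
    memcpy(out, &v, sizeof v);
    _mm_empty();
}

/* Hypothetical helper: arithmetic shift right by a run-time count. */
static void sar_i16x4_var(const int16_t in[4], int count, int16_t out[4])
{
    __m64 v;
    memcpy(&v, in, sizeof v);
    v = _mm_sra_pi16(v, _mm_cvtsi32_si64(count)); /* count held in an __m64 */
    memcpy(out, &v, sizeof v);
    _mm_empty();
}
```

Shifting by 15 reduces every word to its sign: -1 for negative inputs and 0 otherwise.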

PSRAD

__m64 _m_psrad(__m64 m, __m64 count)

__m64 _mm_sra_pi32(__m64 m, __m64 count)

Shift two 32-bit values in m right by the amount specified by count while shifting in the sign bit.

__m64 _m_psradi(__m64 m, int count)

__m64 _mm_srai_pi32(__m64 m, int count)

Shift two 32-bit values in m right by the amount specified by count while shifting in the sign bit. For the best performance, count should be a constant.
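An arithmetic right shift by k divides by 2^k but rounds toward negative infinity, whereas C integer division truncates toward zero; the two disagree for negative odd values. A sketch assuming GCC or Clang on x86-64, with hypothetical helper names.

```c
#include <stdint.h>
#include <string.h>
#include <mmintrin.h>

/* Hypothetical helper: arithmetic shift right by a constant 1. */
static void sar_i32x2_imm1(const int32_t in[2], int32_t out[2])
{
    __m64 v;
    memcpy(&v, in, sizeof v);
    v = _mm_srai_pi32(v, 1); /* -7 becomes -4, not -3 as with -7 / 2 */
    memcpy(out, &v, sizeof v);
    _mm_empty();
}

/* Hypothetical helper: arithmetic shift right by a run-time count. */
static void sar_i32x2_var(const int32_t in[2], int count, int32_t out[2])
{
    __m64 v;
    memcpy(&v, in, sizeof v);
    v = _mm_sra_pi32(v, _mm_cvtsi32_si64(count));
    memcpy(out, &v, sizeof v);
    _mm_empty();
}
```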

Table C-1. Simple Intrinsics

Mnemonic

Intrinsic

Description